3D Navigation

February, 2025

During the development of several prototypes at Endeavor One, I realized that we needed an effective way for flying AI characters to navigate three-dimensional space. To address this, I created an Octree-based navigation grid system, which I then used to build an attacking Bat AI.

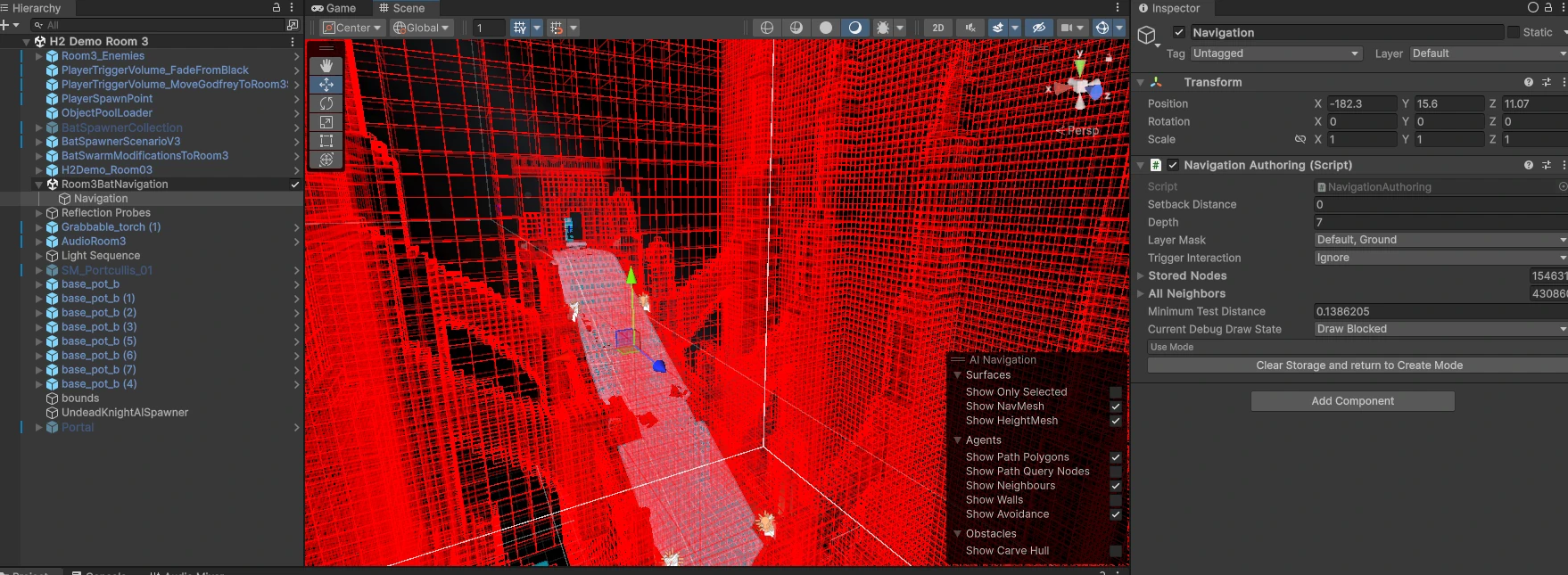

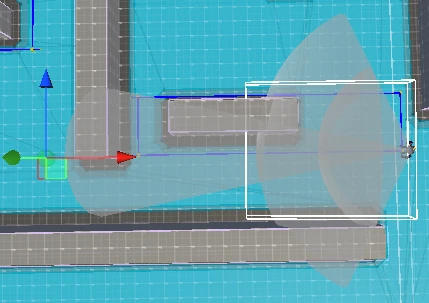

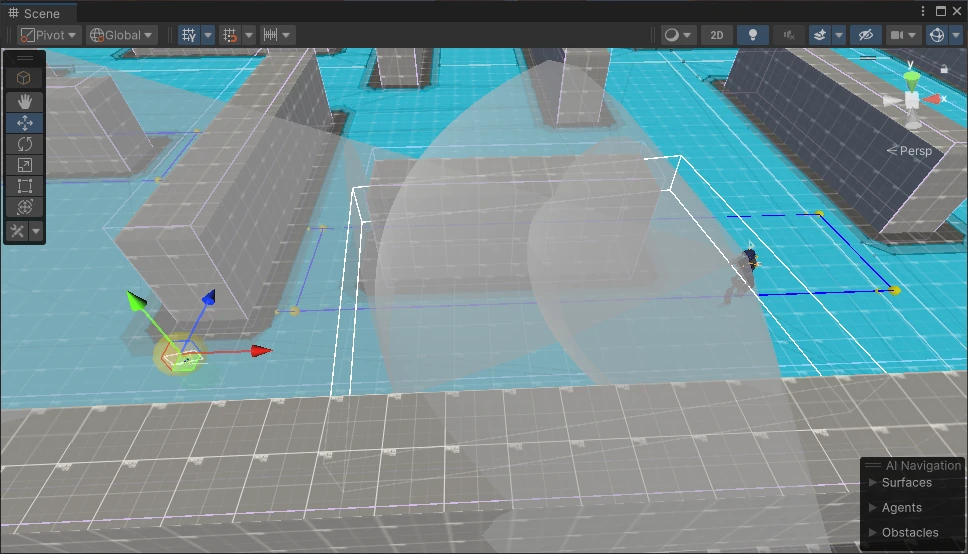

This image illustrates part of the Navigation Mesh generation in the Unity editor. Mesh generation involves the following steps:

- An axis-aligned bounding box is placed in a Unity Subscene using the Navigation Authoring component.
- Collision tests are performed using the smallest possible box size defined by the Octree settings. This determines which areas contain obstacles and which are open.
- These small collision boxes are then merged into larger boxes that fit within the Octree's bounding box, grouping contiguous areas together. The red boxes in the image represent the "blocked" areas at this stage.
- Connections are then found between adjacent boxes that are free of obstacles, establishing valid paths for the AI.
- The navigation data is then baked (serialized) into a format compatible with the Entity Component System (ECS).
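The generation steps above can be sketched outside of Unity. The following Python is a minimal illustration, not the production code: `Box`, `build_boxes`, `share_face` and `connect_open_boxes` are hypothetical names, the collision test is replaced by a caller-supplied `is_blocked` function, and the grid size is assumed to be a power of two.

```python
from dataclasses import dataclass
from itertools import combinations, product


@dataclass(frozen=True)
class Box:
    x: int          # minimum corner, measured in leaf cells
    y: int
    z: int
    size: int       # edge length, measured in leaf cells
    blocked: bool


def build_boxes(x, y, z, size, is_blocked):
    """Test every leaf cell, then merge uniform octants on the way back up.

    `size` is assumed to be a power of two; `is_blocked(x, y, z)` stands in
    for the engine's collision test at the smallest box size.
    """
    if size == 1:
        return [Box(x, y, z, 1, is_blocked(x, y, z))]
    half = size // 2
    octants = [build_boxes(x + dx, y + dy, z + dz, half, is_blocked)
               for dx, dy, dz in product((0, half), repeat=3)]
    flags = {octant[0].blocked for octant in octants}
    # Merge only when all eight octants collapsed to one box with the same flag.
    if all(len(octant) == 1 for octant in octants) and len(flags) == 1:
        return [Box(x, y, z, size, flags.pop())]
    return [box for octant in octants for box in octant]


def share_face(a, b):
    """True when two boxes touch across a face (not just an edge or corner)."""
    abutting_axes = 0
    for a0, b0 in ((a.x, b.x), (a.y, b.y), (a.z, b.z)):
        a1, b1 = a0 + a.size, b0 + b.size
        if a1 == b0 or b1 == a0:
            abutting_axes += 1          # boxes meet exactly on this axis
        elif not (a0 < b1 and b0 < a1):
            return False                # separated by a gap on this axis
    return abutting_axes == 1


def connect_open_boxes(boxes):
    """Build an adjacency map between unblocked boxes that share a face."""
    open_boxes = [box for box in boxes if not box.blocked]
    graph = {box: set() for box in open_boxes}
    for a, b in combinations(open_boxes, 2):    # brute force, fine for a sketch
        if share_face(a, b):
            graph[a].add(b)
            graph[b].add(a)
    return graph
```

The pairwise neighbor search is quadratic in the number of boxes; a real bake step would more likely walk the tree to find neighbors, but the resulting graph has the same shape.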

At runtime, an ECS system loads the data, accepts pathfinding requests and generates paths using a Burst-optimized, parallelized A* pathfinding algorithm.
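For readers unfamiliar with A*, here is a minimal single-threaded sketch over a graph of box centers. It does not reflect the Burst or job-system side of the real implementation; the `astar` function, the `neighbors` adjacency map and the `centers` lookup are assumptions made for illustration.

```python
import heapq
import math
from itertools import count


def astar(neighbors, centers, start, goal):
    """Return the list of nodes from start to goal, or None if unreachable.

    neighbors: dict mapping a node to an iterable of connected nodes.
    centers:   dict mapping a node to its (x, y, z) center point.
    """
    def heuristic(node):
        # Straight-line distance never overestimates the remaining cost.
        return math.dist(centers[node], centers[goal])

    tie = count()                       # tie-breaker so nodes are never compared
    open_heap = [(heuristic(start), next(tie), 0.0, start)]
    best_cost = {start: 0.0}
    came_from = {}
    closed = set()

    while open_heap:
        _, _, cost, node = heapq.heappop(open_heap)
        if node == goal:
            path = [node]
            while node in came_from:    # walk parents back to the start
                node = came_from[node]
                path.append(node)
            return path[::-1]
        if node in closed:
            continue                    # stale heap entry
        closed.add(node)
        for nxt in neighbors.get(node, ()):
            new_cost = cost + math.dist(centers[node], centers[nxt])
            if new_cost < best_cost.get(nxt, math.inf):
                best_cost[nxt] = new_cost
                came_from[nxt] = node
                heapq.heappush(
                    open_heap, (new_cost + heuristic(nxt), next(tie), new_cost, nxt))
    return None
```

Because each request only reads the shared graph and writes its own local state, many requests can be run side by side, which is the property a parallel implementation relies on.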

The result? Bats.

Lots and lots of bats.

State Machine Tool

February, 2025

At Endeavor One, one of my key responsibilities was managing a wide variety of AI systems. Most of these AIs were controlled by state machines or behavior trees. Often these were systems that I reworked to improve the performance of prototypes initially created by designers using visual tools, such as Opsive’s Behavior Designer.

While visual tools are great for quickly prototyping ideas, they have significant limitations when it comes to optimizing AI for production. Specifically, they struggle with efficiently handling large numbers of AI entities at runtime.

The challenge with rewriting these systems myself was that it effectively "locked them in," since any further adjustments required a programmer to be involved.

To solve this, I developed a Visual State Machine system that allowed designers to build and tweak AI state machines using a GUI. This system offers the flexibility of prebuilt components, enabling designers to adjust behavior without needing a programmer for every change.

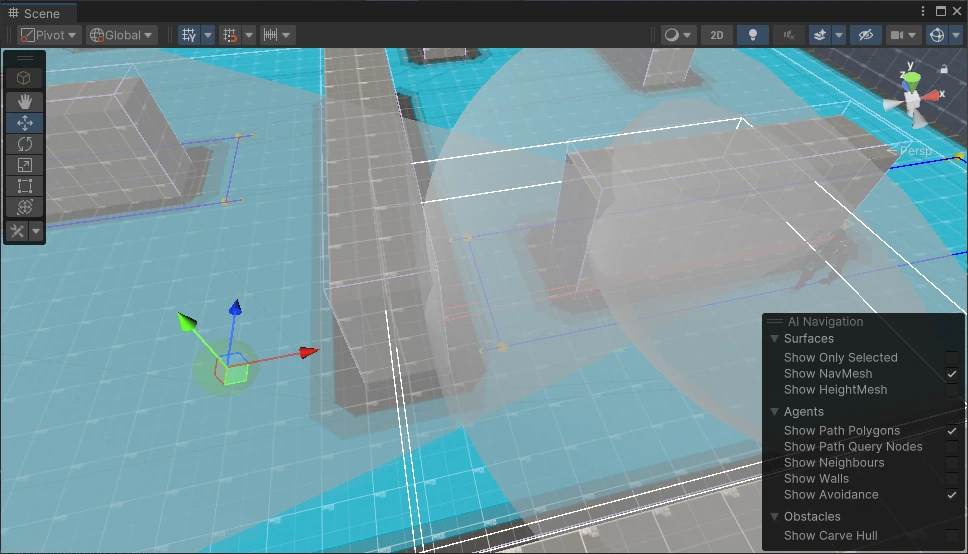

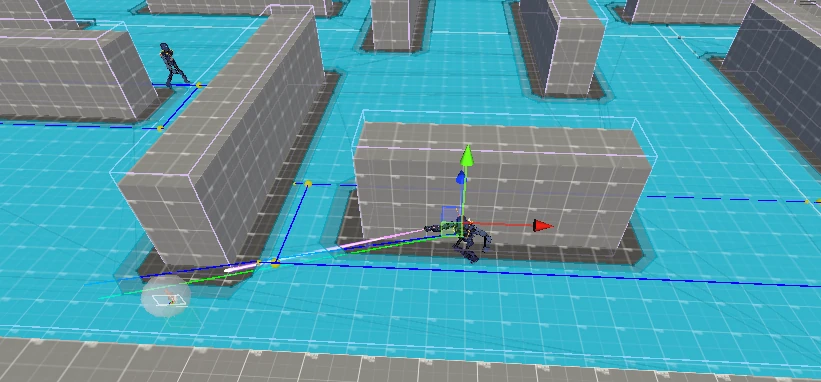

This image illustrates a basic example of the Visual State Machine tool in action, connected to an AI in debug mode.

In this case, the patrolling AI is in the MoveToLocation state, waiting for the ArrivedConsideration to be satisfied. When it reaches the patrol point, ArrivedConsideration evaluates to true, triggering a transition to the SetPatrolDestination state. When that state’s HasPathConsideration evaluates to true, the AI switches back to the MoveToLocation state.

This design supports hierarchical states (a StateMachine that executes within a state) and multiple paths between states.

The underlying StateMachine is built out of simple C# classes, which keeps it fast, small and efficient.
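The patrol example can be sketched as a handful of plain classes. This is an illustrative Python version, not the C# tool itself: the `State` and `StateMachine` classes, the dictionary used as the AI's context, and the one-dimensional movement are all assumptions; only the state and consideration names come from the example above.

```python
class State:
    def __init__(self, name, on_update=None):
        self.name = name
        self.on_update = on_update      # callable(ctx), run once per tick
        self.transitions = []           # (consideration, target state name)

    def add_transition(self, consideration, target):
        self.transitions.append((consideration, target))
        return self


class StateMachine:
    def __init__(self, states, initial):
        self.states = {state.name: state for state in states}
        self.current = initial

    def tick(self, ctx):
        state = self.states[self.current]
        if state.on_update:
            state.on_update(ctx)
        # The first consideration that evaluates to true wins.
        for consideration, target in state.transitions:
            if consideration(ctx):
                self.current = target
                break


def move_to_location(ctx):
    # Step one unit toward the destination (1D for brevity).
    ctx["pos"] += (ctx["dest"] > ctx["pos"]) - (ctx["dest"] < ctx["pos"])


def set_patrol_destination(ctx):
    ctx["index"] = (ctx["index"] + 1) % len(ctx["points"])
    ctx["dest"] = ctx["points"][ctx["index"]]


def arrived_consideration(ctx):
    return ctx["pos"] == ctx["dest"]


def has_path_consideration(ctx):
    return ctx["dest"] != ctx["pos"]


def make_patrol_machine():
    move = State("MoveToLocation", move_to_location)
    move.add_transition(arrived_consideration, "SetPatrolDestination")
    pick = State("SetPatrolDestination", set_patrol_destination)
    pick.add_transition(has_path_consideration, "MoveToLocation")
    return StateMachine([move, pick], "MoveToLocation")
```

Because `StateMachine.tick` has the same signature as a state's `on_update`, a nested machine can be run inside a state with `State("Patrol", on_update=make_patrol_machine().tick)`, which is one simple way to get hierarchical states.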

AI Senses

June, 2024

I have professionally developed a number of systems for mimicking senses like vision and hearing in video games. Requirements for code efficiency, accuracy and the exact parameters of the senses vary wildly from project to project, so I developed a configurable system that lets you specify a number of different shapes for each sense and then uses simple, high-performance algorithms to run vision or hearing tests at runtime.

This image is a top-down look at an AI character’s vision with the debugging options turned on. In this example the character’s vision is represented by three cones. I have found that the debugging representation of the senses is vital to tracking down issues. This system has features that can depict sense-shapes either in the editor, or in a final build if certain debug options are enabled.

Each cone can assign a different "visibility" value to a Detectable found within it. This allows objects in nearer cones to be considered more "visible". The selected green sphere in this image is a debug representation of a Detectable. It is currently green because it has not been detected.

In this image the detectable is within the vision cones but behind a wall. Two red lines indicate the vision raycasts that are performed to detect obstacles between the Viewer and the Detectable. Notice that the Detectable is still colored green because it has not yet been spotted.
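A layered cone test of this kind can be sketched in a few lines. The Python below is a top-down 2D approximation under stated assumptions: the `Cone` fields, the example angles and ranges, and the `is_occluded` callback (standing in for the engine's raycasts) are hypothetical and not taken from the actual system.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Cone:
    half_angle_deg: float   # half of the cone's opening angle
    max_range: float        # how far the cone reaches
    visibility: float       # score given to a Detectable inside this cone


def cone_contains(cone, viewer_pos, forward, target_pos):
    dx = target_pos[0] - viewer_pos[0]
    dy = target_pos[1] - viewer_pos[1]
    distance = math.hypot(dx, dy)
    if distance > cone.max_range:
        return False
    if distance == 0.0:
        return True
    # Compare cosines instead of angles to avoid an acos call per test.
    cos_to_target = (dx * forward[0] + dy * forward[1]) / (
        distance * math.hypot(forward[0], forward[1]))
    return cos_to_target >= math.cos(math.radians(cone.half_angle_deg))


def visibility_of(cones, viewer_pos, forward, target_pos,
                  is_occluded=lambda viewer, target: False):
    """Return the highest visibility among containing cones, 0.0 if unseen."""
    best = max((cone.visibility for cone in cones
                if cone_contains(cone, viewer_pos, forward, target_pos)),
               default=0.0)
    # Only pay for the (assumed expensive) occlusion test when a cone matched.
    if best > 0.0 and is_occluded(viewer_pos, target_pos):
        return 0.0
    return best


# Hypothetical three-cone setup: narrow and near scores highest.
EXAMPLE_CONES = [Cone(30, 5, 1.0), Cone(45, 10, 0.6), Cone(60, 20, 0.3)]
```

Running the cheap cone checks first and the occlusion test last keeps the per-frame cost low when most Detectables are outside every cone.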

In this image the Detectable is within the most distant vision cone. Its debug color has changed to yellow because it has been partially detected. At this point the vision information is fed into an “Aggression” system. Aggression increases during partial detection, which switches the AI into either an Investigate or a Pursue state.

When Aggression has increased enough, the AI enters a combat state where it will remain until it loses the Detectable.
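One way such an accumulator could work is sketched below. The thresholds, gain and decay rates, the `Idle` fallback state, and the rule that Investigate precedes Pursue are all assumptions chosen for illustration; the source only states that Aggression rises during partial detection and eventually leads to combat.

```python
from dataclasses import dataclass


@dataclass
class Aggression:
    value: float = 0.0
    gain: float = 1.0             # increase per second at visibility 1.0 (assumed)
    decay: float = 0.5            # decrease per second while nothing is seen (assumed)
    investigate_at: float = 0.25  # hypothetical thresholds
    pursue_at: float = 0.5
    combat_at: float = 1.0

    def update(self, visibility, dt):
        """Feed in this frame's visibility score and return the resulting state."""
        if visibility > 0.0:
            self.value = min(self.combat_at,
                             self.value + self.gain * visibility * dt)
        else:
            # The Detectable is lost, so aggression drains away again.
            self.value = max(0.0, self.value - self.decay * dt)
        return self.state()

    def state(self):
        if self.value >= self.combat_at:
            return "Combat"
        if self.value >= self.pursue_at:
            return "Pursue"
        if self.value >= self.investigate_at:
            return "Investigate"
        return "Idle"
```

Since the value only rises while something is visible, the AI stays in the combat state for as long as the Detectable remains seen and drops out once it is lost, matching the behavior described above.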

Armageddon Road

April, 2011

Armageddon Road was a project I worked on for a few years, built in the GarageGames engine. The idea was to combine a third-person brawler with a first-person shooter.

Over the course of the project, I modeled and rigged the primary character in 3D Studio Max, including several clothing options.

I wrote most of the enemy AI, which uses an A* algorithm to navigate through an underground facility.

Other interesting aspects include a complete inventory and weapon system.

Multitouch Game

July, 2009

As a contractor at Microsoft, I wrote a small game engine in DirectX 10 to drive an example game that demonstrated a Microsoft Vista multitouch API.

I wrote all the C++ code for loading and rendering the game's models, as well as the game rules and GUI programming.

Fractured

October, 2004

This game prototype was constructed as part of my duties as Lead Programmer for a small company called BYF games.

I built the animation and combat systems using the LithTech Jupiter game engine.

Older Stuff

Various

I have been building game prototypes most of my life. If you really want to go dumpster diving, check out some of these very old prototypes, ancient websites and various other juvenilia:

Armageddon Road Exterior Demo Video